You can just port things to Cloudflare Workers

This January I decided to double down on using up my free AI credits by building a few projects on Cloudflare Workers. I’ve always really liked the idea of a platform with cheap/free resources, but every time I build larger things on it I run into limits that frustrate me, and I move to cheap VPS hosting like Fly.io or DigitalOcean.

Is vibeporting a word? Maybe it should be?

Datasette-ts

Over the holiday break, I was reading some of Simon Willison’s posts about AI and checked where it’s currently possible to deploy Datasette. You can deploy it pretty much everywhere but NOT on Cloudflare Workers, because Workers doesn’t really support much of Python’s ecosystem. I pointed Codex with GPT-5.2 Codex on high/medium at the Datasette repo and had it break the project down into README tasks and slowly work through them one at a time. I’m not yet convinced by the crazy subagent stuff or that worktrees are worth it.

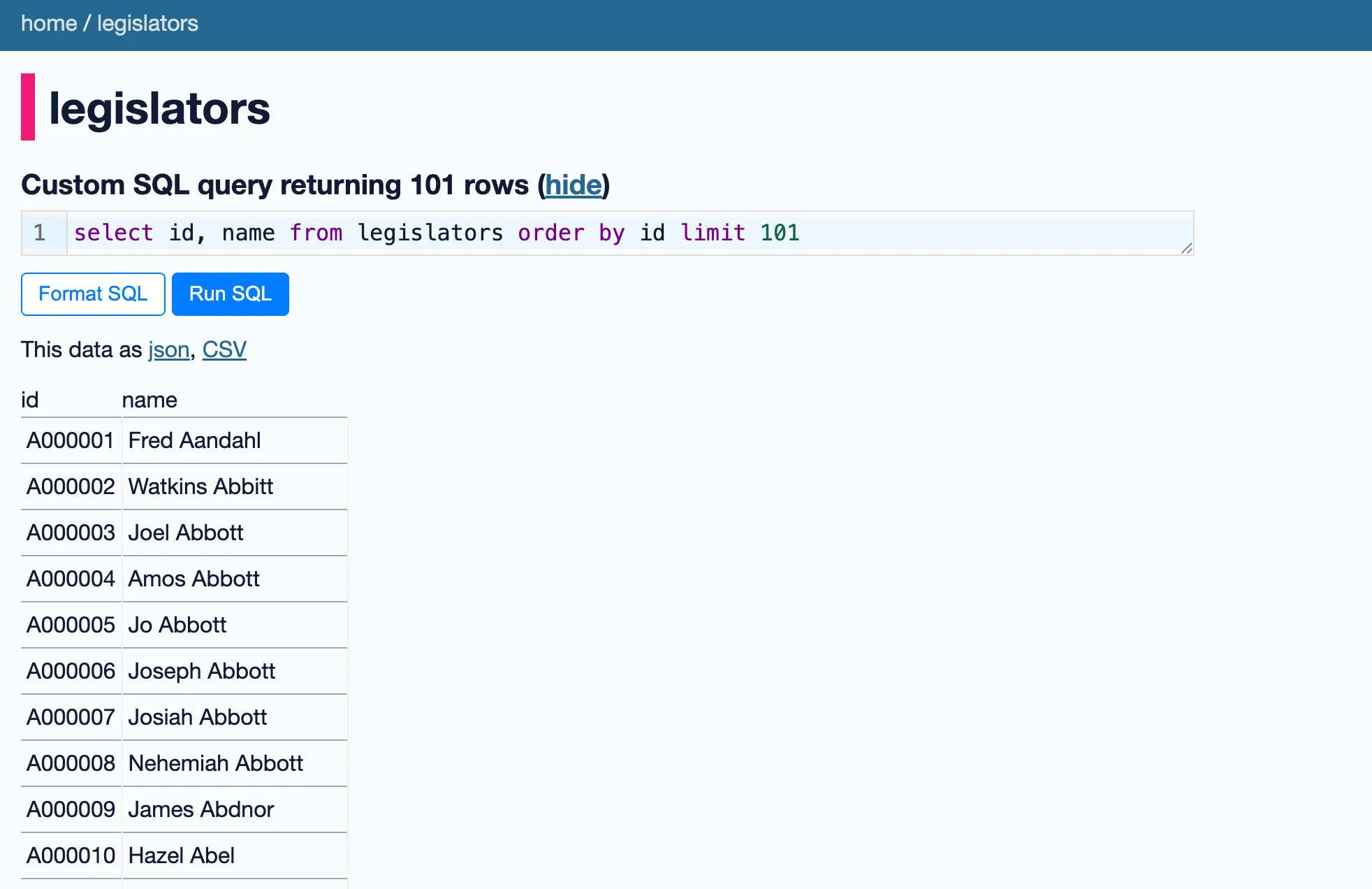

As I got into it, it was clear I needed to narrow the scope. Mr. Willison has built a very mature project with a plugin system and a ton of built-in features, and it depends on other libraries he has also published, like sqlite-utils. What I wanted was something that feels similar and runs on Cloudflare Workers. I picked up Drizzle and Hono, plus Alchemy to handle Cloudflare deployments. I chose not to rebuild the frontend as a React SPA, instead rendering something similar to the original Jinja templates using Hono’s JSX.

Anyway, it ended up working pretty well. You can see a live demo at datasette-legislators.ep.workers.dev. I can’t say the code is in a good state, but the source lives at https://github.com/scttcper/datasette-ts.

SESnoop

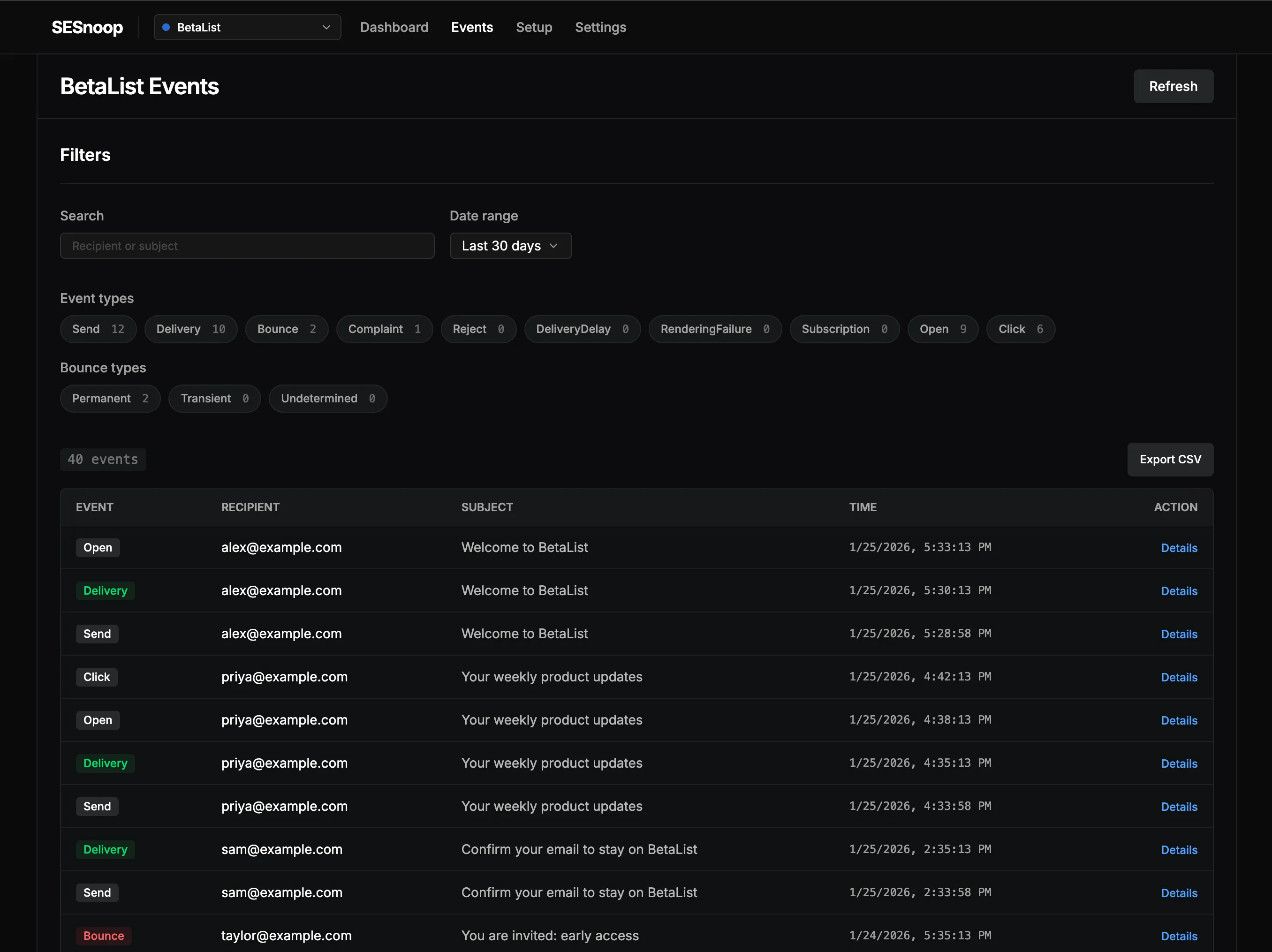

Another Cloudflare Worker port, this time of a Rails app called Sessy. I decided to rename it because I was going for a different vibe. I send a bunch of emails via SES for xmplaylist.com and was looking into running Sessy to handle the bounces and complaints. However, I can’t get myself to think about Ruby for more than 1 minute. So again I pointed Codex at the Sessy repo and a few other Cloudflare/Hono example repos and had it build out a monorepo where the worker handles the API and Cloudflare assets serves a React SPA frontend.

The hard thing to reel in was how much frontend it was willing to build before I could stop it and start bringing in shadcn components. It made a bunch of select components and other things that were generally pretty ugly. So then you have to tell it to replace all the ugly things with premade shadcn + Base UI components.

This project took a bit more work than just pointing AI at the code. It made a number of Cloudflare mistakes, and tests were difficult to get working 100% correctly. One example: it put the webhook at /webhook, which Cloudflare would attempt to load as an asset since the worker is bound to /api. That’s always a bit of a headscratcher until you remember how this all works. It was really cool getting to know how SNS works with SES, and watching the notifications flow into D1 is fun.
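To make the /webhook mistake concrete, here is a minimal sketch of the routing as I think about it. This is a simplified mental model, not Cloudflare’s actual routing logic, and the names `routeFor` and `WORKER_PREFIX` are made up for illustration. The only thing taken from the project is that the worker is bound to /api.

```typescript
// Simplified model of the setup described above, NOT Cloudflare's real router.
// Assumption: anything under the worker's prefix runs the worker,
// everything else is treated as a static asset request for the SPA.
type Target = "worker" | "assets";

// Hypothetical constant; the post only says the worker is bound to /api.
const WORKER_PREFIX = "/api";

function routeFor(pathname: string): Target {
  // Match "/api" itself or anything below it, but not lookalikes such as "/apix".
  const underPrefix =
    pathname === WORKER_PREFIX || pathname.startsWith(WORKER_PREFIX + "/");
  return underPrefix ? "worker" : "assets";
}

// The generated route never reaches the worker in this model:
console.log("/webhook ->", routeFor("/webhook"));
// Moving it under the prefix does:
console.log("/api/webhook ->", routeFor("/api/webhook"));
```

Under this model the fix is just to move the webhook under the prefix the worker already owns, for example /api/webhook.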

I also tried publishing datasette-ts to npm, and it kinda works for deploying to Cloudflare if Alchemy is set up. Or you can run it locally with npx datasette-ts@latest serve ./legislators.db

I don’t have a live demo, but you can check out the source code at scttcper/sesnoop.

Conclusion

Pretty happy with how both of these turned out. I didn’t exclusively use Codex on either of them, but Codex was the main driver 95% of the time. I think Codex w/ GPT-5.2 Codex on high or medium is a great model, and through the team plan you can basically run medium as long as you want. I exhausted about one week’s worth of my Codex plan on each project.